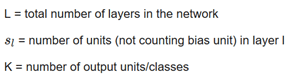

Now the remaining task is to find the weights (theta) for the neural network. First, we need a cost function. Let us declare some variables as follows:

Furthermore, h(x)k represents the hypothesis for the kth element in the final output layer. This might not make a whole lot of sense, but hopefully the couple of figures below can help with your understanding.

The logistic regression regularized cost function formula is (please read my article on the derivation of this seemingly complex function):
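In the usual notation, and assuming m training examples, n features, and a regularization parameter lambda (these symbol names are assumptions chosen to match the rest of this article), the regularized logistic regression cost is commonly written as:

$$
J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\left[\, y^{(i)}\log h_\theta\big(x^{(i)}\big) + \big(1-y^{(i)}\big)\log\Big(1-h_\theta\big(x^{(i)}\big)\Big) \right] + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^{2}
$$

Note that the regularization sum starts at j = 1, so the bias weight theta 0 is not penalized.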

Now, because the final layer of a neural network can consist of multiple output units, we have to change this function. For example, if the task is to identify an object as a car, motorcycle, or truck, there would be 3 elements in the final output layer, so 3 hypotheses would have to be computed. Hence, for the cost function to account for all of them, we sum the cost over each of the K output units (first part of the equation below).
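Written out, the neural network cost function usually takes the following form. The symbols are assumptions following common convention: K is the number of output units, L is the number of layers, and s_l is the number of units in layer l, not counting the bias unit.

$$
J(\Theta) = -\frac{1}{m}\sum_{i=1}^{m}\sum_{k=1}^{K}\left[\, y_k^{(i)}\log\Big(h_\Theta\big(x^{(i)}\big)\Big)_k + \big(1-y_k^{(i)}\big)\log\Big(1-\Big(h_\Theta\big(x^{(i)}\big)\Big)_k\Big) \right] + \frac{\lambda}{2m}\sum_{l=1}^{L-1}\sum_{i=1}^{s_l}\sum_{j=1}^{s_{l+1}}\Big(\Theta_{ji}^{(l)}\Big)^{2}
$$

With K = 1 and a single weight vector, this reduces to the regularized logistic regression cost.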

The second part of this equation, with the three summations, simply adds up the square of every theta across all the layers, excluding the weights attached to the bias nodes. The math looks tricky, but you do not need to follow every index as long as you understand conceptually what it is doing.
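To make the two parts of the cost concrete, here is a minimal NumPy sketch. It assumes each weight matrix has shape (units in next layer, units in current layer + 1) with the bias weights in column 0, and that `Y` is one-hot encoded. The names `forward`, `nn_cost`, `thetas`, and `lam` are hypothetical and chosen only for this illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(thetas, X):
    """Forward propagation; returns output-layer activations of shape (m, K)."""
    a = X
    for theta in thetas:
        # Prepend the bias unit (a column of ones) before applying the weights.
        a = np.hstack([np.ones((a.shape[0], 1)), a])
        a = sigmoid(a @ theta.T)
    return a

def nn_cost(thetas, X, Y, lam):
    """Regularized cost: sum over examples and over the K output units."""
    m = X.shape[0]
    h = forward(thetas, X)
    # First part: logistic cost summed over every example and output unit.
    data_term = -np.sum(Y * np.log(h) + (1 - Y) * np.log(1 - h)) / m
    # Second part: sum of squared weights in every layer, skipping column 0 (bias).
    reg_term = lam / (2 * m) * sum(np.sum(t[:, 1:] ** 2) for t in thetas)
    return data_term + reg_term
```

Slicing with `t[:, 1:]` is what "excluding the bias node" means in practice: the first column of each weight matrix never enters the penalty.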

Now, to use gradient descent or one of the more advanced minimization algorithms, you need to give the program a way to calculate the partial derivatives of the cost function with respect to every theta. In neural networks, we compute these partial derivatives with an algorithm called “backpropagation”. Look at the figure below, and I will explain it further (please do not stress; it is not as complicated as it looks).

Basically, pretend we have a training set with m examples. We create a new variable called capital delta, initialized to zero, which will be used later to compute the partial derivatives of the cost function J(theta). Now iterate through all the training examples and, for each one, perform forward propagation. Then, for the very last layer (the output layer), calculate the delta, which is simply the hypothesis minus y (the value you expect). It is hard to conceptualize how delta is used in neural networks, so let us talk about that in more detail. If you look at the figure below

For the delta of the third layer, it can be proven, through some lengthy derivations, that the formula is as stated. We care about these deltas because the activation of a node multiplied by the delta of the node it feeds into in the next layer gives you the partial derivative of the cost function with respect to the weight connecting them (ignoring regularization), which is exactly what we need! Going back to our algorithm, we add this product to the variable capital delta for every training example. At the very end, we multiply our results by some constants as shown below:
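The loop described above (forward propagation, output delta, propagating delta backwards, and accumulating into capital delta) can be sketched in NumPy as follows. This is an illustrative sketch, not a definitive implementation: it assumes sigmoid activations in every layer, weight matrices with the bias weights in column 0, and the hypothetical names `accumulate_deltas` and `thetas`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def accumulate_deltas(thetas, X, Y):
    """Return one capital-delta accumulator per weight matrix."""
    Deltas = [np.zeros(t.shape) for t in thetas]
    for x, y in zip(X, Y):
        # Forward propagation, storing the input (with bias unit) to each layer.
        activations = []
        a = x
        for theta in thetas:
            a = np.concatenate([[1.0], a])
            activations.append(a)
            a = sigmoid(theta @ a)
        # Output layer: delta is the hypothesis minus y.
        delta = a - y
        # Walk backwards through the weight matrices.
        for l in range(len(thetas) - 1, -1, -1):
            a_l = activations[l]
            # Activation times delta of the next layer, accumulated per weight.
            Deltas[l] += np.outer(delta, a_l)
            if l > 0:
                # Propagate delta one layer back; drop the bias entry and
                # multiply by the sigmoid derivative a * (1 - a).
                delta = (thetas[l].T @ delta)[1:] * a_l[1:] * (1 - a_l[1:])
    return Deltas
```

Dividing each accumulator by m gives the unregularized partial derivatives, which can be compared against a finite-difference estimate of the cost as a sanity check.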

The regularization term is applied only when j is not 0, because we do not want to regularize the bias terms. Remember that lambda multiplied by theta is the regularization term.
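As a small sketch of this final step, the function below divides the accumulated capital delta by m and adds the regularization term to every column except column 0, which is assumed to hold the bias weights. The names `regularized_gradient`, `Delta`, `Theta`, and `lam` are hypothetical.

```python
import numpy as np

def regularized_gradient(Delta, Theta, m, lam):
    """Turn an accumulated capital delta into the gradient for one layer."""
    # Average over the m training examples (creates a new array).
    D = Delta / m
    # Add (lambda / m) * theta for j != 0 only; the bias column is untouched.
    D[:, 1:] += (lam / m) * Theta[:, 1:]
    return D
```

For example, with every entry of `Delta` equal to 1, every entry of `Theta` equal to 2, m = 4, and lambda = 2, the bias column of the result is 0.25 and every other entry is 0.25 + 0.5 * 2 = 1.25.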