Before we go more into looking at data sets and creating more hypotheses lets hold a quick review on matrices. Basic multiplication, addition, subtraction, and division between vectors and scalars should be pretty easy to grasp from multiple tutorials online. We will primarily go over the properties of matrices in this article. First, matrices are not commutative, so x*y != y * x. However, matrices are associative (x*y)*z = x * (y*z). An identity matrix is a matrix where the diagonal from top left to bottom right are all 1’s and the rest of the values in this square matrix are 0. When you multiply this type of matrix with any other matrix of the same square dimensions, then the matrix will remain the same. Similar to how anything * 1 = anything, anything with the same dimension * identity matrix = anything. Finally, a transpose of a matrix is when you rotate it 90 degrees so that matrix before x, y = matrix transposed y, x.

In previous articles, we have just talked about a hypotheses with theta1x + theta = y, with just one x and one y. What if there were multiple features, like how in a house there are a multitude of factors that play into determining the worth of it. The data table below demonstrates an example:

There is a key with notation about how to represent the data set with some examples to the right using the data set. Now with our newfound knowledge of matrices let’s change the hypothesis to become cleaner. Currently if there are n factors in determining a function, the hypotheses function (the value that the machine learning algorithm outputs) is determined by:

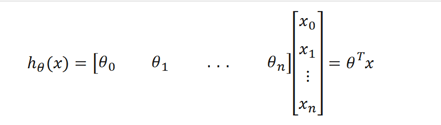

If we have a n+1 x 1 matrix of x0….xn, where x0 is one and we have a matrix of theta0…thetan, the product of theta transposed * the matrix of x would give us the hypotheses, hopefully it is clear to see in the picture below how the transpose of theta and the matrix of x’s will give us our hypotheses with multiple features.

We can now segue into gradient descent with multiple features! There is more content for that topic in another article.